Version 3 (modified by kanani, 8 years ago)

Code verification & benchmarking

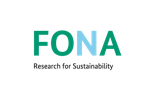

Testing the overall functionality of the PALM-4U components is organized into three categories (see Fig. 1):

Fig. 1: Testing categories.

Simulation setups for category-1 tests

Category 1 includes checks of the PALM-4U source code for compliance with the general Fortran coding standard and with the specific PALM formatting rules. In addition, the code is tested for run-time errors and compiler-dependent functionality issues, and the simulation results are checked for elementary plausibility. For this purpose, small setups are sufficient: they run on desktop PCs (2-4 processor cores) within O(1-10 min).
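The general-standard part of these category-1 checks can be illustrated with a minimal Python sketch. It flags two rules of the Fortran standard itself (free-form source lines may not exceed 132 characters, and the tab character is not part of the Fortran character set) plus one hypothetical house rule (no trailing whitespace) standing in for the PALM-specific formatting rules, which are not spelled out on this page. The function name `check_fortran_source` is illustrative; this is not the checker actually used in the PALM-4U test procedure.

```python
import sys

# Fortran free-form source lines may contain at most 132 characters.
MAX_LINE_LENGTH = 132


def check_fortran_source(text):
    """Return a list of (line_number, message) tuples for basic violations."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if len(line) > MAX_LINE_LENGTH:
            problems.append((lineno, f"line exceeds {MAX_LINE_LENGTH} characters"))
        # The tab character is not in the Fortran character set.
        if "\t" in line:
            problems.append((lineno, "tab character"))
        # Hypothetical house rule standing in for PALM-specific formatting rules.
        if line != line.rstrip():
            problems.append((lineno, "trailing whitespace"))
    return problems


if __name__ == "__main__":
    # Usage: python check_fortran.py file1.f90 file2.f90 ...
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as f:
            for lineno, msg in check_fortran_source(f.read()):
                print(f"{path}:{lineno}: {msg}")
```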

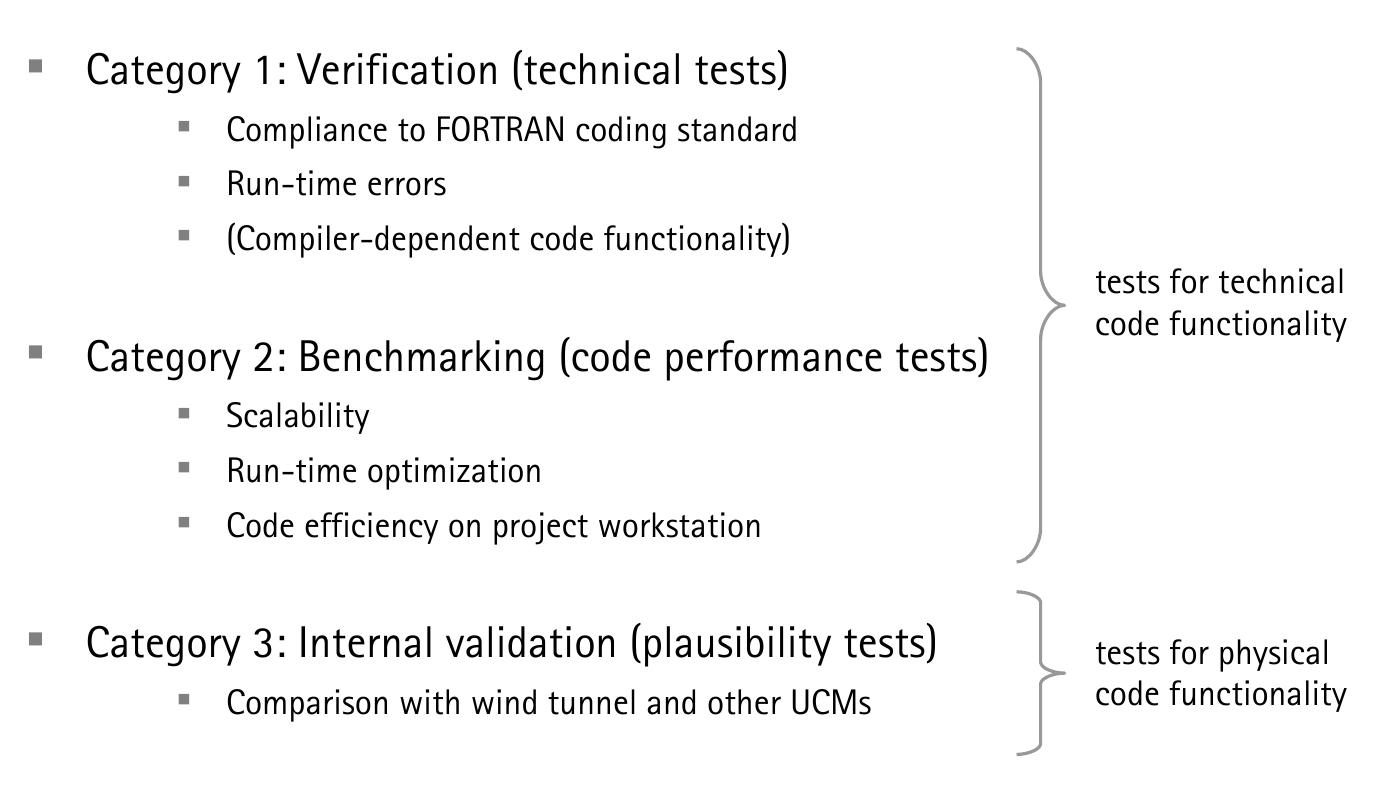

Three setups with different generic building topographies and tree distributions are available (see Fig. 2). They make use of the urban surface model (USM), the clear-sky radiation model, and the plant canopy model (PCM). The main setup features are:

- Domain: 20 × 20 × 20 m³

- Grid spacing: 2 m

- Moderate wind from the west

- Clear-sky conditions, dry atmosphere (the USM does not yet consider latent heat fluxes in the surface energy balance)

- Cyclic lateral boundary conditions

Fig. 2: (S)mall setups with different generic building topographies and tree distributions.
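To show how small these setups are, the grid dimensions follow directly from the domain extent and grid spacing listed above. The short Python sketch below does that arithmetic; the helper `grid_cells` is hypothetical, and the last step assumes PALM's convention that horizontal grid indices run from 0 to `nx` (so `nx` is one less than the number of grid points along x).

```python
# Setup parameters from the feature list of the (S)mall setups.
domain_m = (20.0, 20.0, 20.0)  # domain extent in x, y, z (m)
grid_spacing_m = 2.0           # isotropic grid spacing (m)


def grid_cells(extent, spacing):
    """Number of grid cells needed to cover `extent` with cells of size `spacing`."""
    n = extent / spacing
    if abs(n - round(n)) > 1e-9:
        raise ValueError("extent is not an integer multiple of the grid spacing")
    return int(round(n))


cells = [grid_cells(extent, grid_spacing_m) for extent in domain_m]
total = cells[0] * cells[1] * cells[2]

# Assumption: PALM indices run from 0 to nx, so nx = number of points - 1.
nx, ny = cells[0] - 1, cells[1] - 1

print(cells, total, nx, ny)
```

Roughly a thousand grid cells is consistent with the stated target of running on 2-4 desktop cores within minutes.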

For each of the three setups, a .zip archive can be downloaded (see the attachments below), containing [INPUT, MONITORING, OUTPUT?] files and a [README] file with the setup documentation:

- generic_cube setup

- generic_canyon setup

- generic_crossing setup
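Once an archive has been downloaded, its contents can be checked against the expected layout. The sketch below uses Python's standard `zipfile` module. The expected entry names come from the tentative list above ([INPUT, MONITORING, OUTPUT?] and [README]) and are therefore assumptions, as is the matching rule: a name counts as present if any path component in the archive starts with it, so `README.txt` satisfies `README`. The function `missing_entries` is hypothetical.

```python
import os
import zipfile

# Assumed contents; the page lists these names only tentatively.
EXPECTED_ENTRIES = ("INPUT", "MONITORING", "OUTPUT", "README")


def missing_entries(archive, expected=EXPECTED_ENTRIES):
    """Return the expected names that match no path component in the zip archive.

    `archive` may be a file path or a binary file-like object.
    """
    with zipfile.ZipFile(archive) as zf:
        components = {
            part for name in zf.namelist() for part in name.split("/") if part
        }
    return [e for e in expected if not any(c.startswith(e) for c in components)]


if __name__ == "__main__":
    for archive in ("generic_cube.zip", "generic_canyon.zip", "generic_crossing.zip"):
        if os.path.exists(archive):
            print(archive, "missing:", missing_entries(archive) or "nothing")
```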

Simulation setups for category-2 tests

Two differently sized setups are defined:

- (M)edium setup for run-time optimization tests and for verifying the code efficiency on the MOSAIK demonstration PC

- (L)arge setup for code scalability tests on a high-performance computing system (HLRN) with up to tens of thousands of processor cores (see Fig. 3)

PALM, the base of PALM-4U, is highly optimized, and its performance scales well up to 40,000 processor cores. PALM-4U shall maintain this level of performance optimization.
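Scalability on a setup like the (L)arge one is commonly judged by strong scaling: the same fixed-size problem is run on increasing core counts N, and each wall-clock time T(N) is compared with a reference run on N_ref cores. This gives the speedup S(N) = T(N_ref) / T(N) and the parallel efficiency E(N) = S(N) · N_ref / N, where E = 1 means ideal scaling. The sketch below computes both; the function name and the timing values are invented for illustration and are not measured PALM or PALM-4U results.

```python
def strong_scaling(timings):
    """Compute speedup and parallel efficiency for a strong-scaling series.

    `timings` maps core count -> wall-clock time (s) for the same fixed-size
    problem. The smallest core count serves as the reference run.
    Returns {core_count: (speedup, efficiency)} in ascending core order.
    """
    n_ref = min(timings)
    t_ref = timings[n_ref]
    results = {}
    for n, t in sorted(timings.items()):
        speedup = t_ref / t
        efficiency = speedup * n_ref / n
        results[n] = (speedup, efficiency)
    return results


# Invented example timings (s) for illustration only; not measured results.
example = {1024: 100.0, 2048: 52.0, 4096: 28.0}
for n, (s, e) in strong_scaling(example).items():
    print(f"{n:6d} cores: speedup {s:5.2f}, efficiency {e:6.1%}")
```

Using the smallest run as reference keeps the measure valid when the problem is too large to run on a single core, which is typical for a large setup.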

Simulation setups for category-3 tests

These setups will be defined once it has been decided (in coordination with the module B and C partners) which urban climate models (UCMs) to apply. On MOSAIK's side, ENVI-met, FITNAH, and MUKLIMO_3 are on the shortlist. The selected setups must be able to run with both PALM-4U and the chosen UCM.

Attachments (12)

- testing_categories.png (128.0 KB) - added by kanani 8 years ago.

- generic_setups_small.png (74.1 KB) - added by kanani 8 years ago.

- generic_cube.zip (7.9 MB) - added by kanani 8 years ago.

- generic_canyon.zip (7.0 MB) - added by kanani 8 years ago.

- generic_crossing.zip (7.9 MB) - added by kanani 8 years ago.

- medium_complex_setup.png (1.4 MB) - added by kanani 8 years ago.

- large_complex_setup.png (952.6 KB) - added by kanani 8 years ago.

- test_urban_r2770.zip (11.8 MB) - added by kanani 7 years ago. Simulation setup for a generic crossing (working with r2770)

- sketch_test_urban.png (174.1 KB) - added by kanani 7 years ago. Sketch for test_urban setup

- test_urban_r2957.zip (30.3 MB) - added by kanani 7 years ago.

- test_urban.zip (31.0 MB) - added by suehring 7 years ago.

- sketch_test_urban.odg (88.1 KB) - added by kanani 6 years ago.